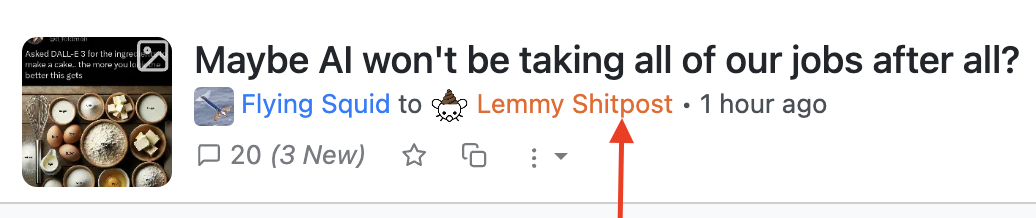

Some tech bro will attempt to make this cake and will tell someone it was better than anything some uppity WOKE human baker could have made, regardless of how bad it turned out.

You can literally taste the fear.

egs

Is that why my cakes keep falling? I thought butger was optional.

You forgot fear

That egg is going to birth some cosmic horror nonsense.

ah yes, using a highly specialized AI intended for image generation to create something that is usually in text form. truly a good judge of the quality of AI…

Who said literally anything about DALL-E’s quality? We’re just laughing at the results because they’re funny.

“Maybe AI won’t be taking all of our jobs after all?”

Learned everything I know from a shitpost. That I did.

The joke is obviously taking the piss out of those “ai dumb lol” posts.

As far as I can see, the AI decided to label the ingredients.

Only an AI would say this.

I like how it just explicitly refuses to label the butger bowls

Because deep down he knows that is butter not butger.

tofu

You can use an electric beater instead of a wire whisk. It makes it extra chewy. :-P

Electric potper

You can’t use the electric potper if you’re using egs.

Certainly not with fear.

vedk, miilk. Sounds like an IKEA-inspired cake to me.

So many jokes. Including:

…Around the corner fudge is made!

…And sediment shaped sediment.

Maybe AI whose purpose is making images won’t be taking cooks’ jobs?

Fear is my favourite type of egg to use in cakes.

Fear of getting salmonella.

Yeah, here I was using love. Never thought of fear. I imagine it has an essence of piss in the pants.

And now for the secret ingredient… fear.

I see we’re using the Gordon Ramsay cookbook today. I prefer Justin Wilson’s, where the secret ingredient is wine for the cook.

Personally, I prefer Alton Brown, where the secret ingredient is goofiness.

Personally, I prefer Jamie Oliver, where all the ingredients are olive oil.

I’m a fan of James May’s secret ingredient, yelling “oh cock!” every so often.

I’m a fan of his other secret ingredient. CHEESE.

There’s an episode of Are You Afraid of the Dark from old-school Nickelodeon (1992 to 1996) that had this exact premise.

You pass butter!

Oh my god…

Yeah welcome to the club

I think you mean butger.

Why is it even called artificial intelligence, when it’s obviously just mindless artificial pattern reproduction?

I guess the same reason why smartphones are called “smart” phones.

Well, I think it comes down to a fundamental belief about consciousness. If you’re non-religious, you probably think that consciousness is a purely biological and understandable process. Something completely understandable should be replicable. Therefore, artificial intelligence. But it’s hard as dong to do well.

Why the hell are you being downvoted? I thought Lemmy had no religious fundamentalists or spiritualists.

Because it is intelligent enough to find and reproduce patterns. Kind of like humans.

But it is artificial.

Machine learning is a much better name. It describes what is happening: a machine is learning to do some specific thing, in this case taking text and outputting pictures… It’s limited by what it learned from. It learned from arrays of numbers representing the colours of pixels, and from strings of text. It doesn’t know what that text means; it just knows how to translate it into arrays of numbers… There is no intelligence, only limited learning.

Only sometimes

sometimes worse

Machine Learning isn’t a good name for these services because they aren’t learning. You don’t teach them by interacting with them. The developers did the teaching and the machine did the learning before you ever opened the browser window. You’re interacting with the result of learning, not with the learning.

Learnéd Machines

Are we so different?

Isn’t meaning just comparing and contrasting similarly learned patterns against each other and saying “this is not all of those other things”?

The closer you scrutinize meaning, the fuzzier it gets. Linguistically at least, though now that I think about it, I suppose the same holds true in science as well.

Yes, we absolutely are different. Okay, if you really boil down every little process our brains perform, there are similarities: we do pattern recognition too, yes. But that isn’t all we do, or all ML systems do, either. I think you’re selling yourself short if you think you’re just recognising patterns!

The simplest difference between us and ML systems was pointed out by another commenter: they are trained on a dataset and then they remain static. We constantly re-evaluate old information, take in new information, formulate new thoughts, and change our minds.

We are able to perceive in ways that computers just can’t. They can’t understand what a smell is because they cannot smell, and they can’t understand what it is to see in the way that we do, because to a computer processing an image is exactly the same as processing any other series of numbers. They do not have abstract concepts to relate recognised patterns to. Generative AI is unable to be truly creative in the way that we are, because it doesn’t have an imagination; it is replicating based on its inputs. Although, again, people on the internet love to say “that’s what artists do”, I think it’s pretty obvious that we wouldn’t have art in the way we do today if that were true… We would still be painting on the walls of caves.

Because that’s what intelligence is. There’s a very funny video floating around of a squirrel repeatedly trying to bury an acorn in a dog’s fur and completely failing to understand why it’s not working. Now sure, a squirrel is not the smartest animal in the world, but it does have some intelligence, and yet there it is just mindlessly reproducing a pattern in the wrong context. Maybe you’re thinking that humans aren’t like that, that we make decisions by actually thinking through our actions and their consequences instead of just repeating learned patterns. I put it to you that if that were the case, we wouldn’t still be dealing with the same problems that have been plaguing us for millennia.

Honestly, I think it’s marketing: “AI” sells better than “machine learning programs”.

AI is also the minimax algorithm for solving tic-tac-toe, and the ghosts that chase Pac-Man around. It’s a broad term. It doesn’t always have to mean “mind-blowing superintelligence that surpasses humans in every conceivable way”. So it makes mistakes; does that mean it’s not “intelligent” in some way?

A lot of the latest thought in cognitive science couches human cognition in terms similar to pattern recognition. Some of the latest theories are known as “predictive processing”, “embodied cognition”, and “4E cognition”, if you want to look them up.